Linux Bridge vs OpenVSwitch — How to Improve Virtualization Network Performance

How to fix packet loss and latency in high bandwidth VPS servers by upgrading to OpenVSwitch

Virtualization is one of the most pervasive and transformational technologies in the hosting world to have come along in the last decade. Despite this, maintaining the efficient operation of virtual machines is not always easy. In this article, we’ll go into one of the most common causes of performance problems we see on virtual machines our customers are running, detailing the symptoms, troubleshooting process, and one very effective solution.

One of the most challenging questions we get from customers running a virtual machine host node is ‘Why is the network running badly on this new VM?’ Generally this starts a search of the entire network infrastructure, from the host node's OS interface to the routers handling the traffic, to confirm that every part is operating properly. More often than not, this exhaustive search turns up no obvious problems: every link is up and running at full speed. Meanwhile, the VM is still experiencing high latency, packet loss, and lower than normal throughput.

At this point, what is the problem? You can continue scouring for ghosts on the network or you can take a moment to consider one common component involved in every node running a hypervisor: the Linux bridge.

The Linux bridge is a neat piece of software that acts as a virtual Ethernet switch. It “bridges” the virtual network interface of each virtual machine and the physical network interface card. As a result, it handles all traffic from the virtual machines, and without it they would have no access to the network at all. Thankfully, it needs no separate installation because it is part of the Linux kernel. It is also simple to configure: once it is active, you simply tell your VMs to use it as their network interface and the job is done.

However, this simplicity does come with some drawbacks. Most notably, as one of the older solutions to this network problem, it does not incorporate the currently known best practices for ensuring high performance software bridging.

There are some situations where the Linux bridge interface can become overwhelmed which can cause some of the symptoms we mentioned earlier:

Does your setup require more than 10 Gbps of networking, or host an unusually high number of VMs? Is your node part of a single very large network with lots of MAC or IP addresses? Situations like these, which generate high packet counts, large amounts of broadcast traffic, or otherwise high bandwidth demands, can cause the bridge to perform poorly or crash outright.
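One rough way to check whether a host is in this situation is to look at the kernel's per-interface drop counters. The sketch below reads them from sysfs. The `SYSFS_NET` variable and the `show_drops` function are names made up for this example, and non-zero counters are only a hint, not proof that the bridge is the bottleneck.

```shell
#!/bin/sh
# Print the kernel's drop counters for every network interface on the host.
# SYSFS_NET is an illustrative override; it defaults to the real sysfs path.
SYSFS_NET="${SYSFS_NET:-/sys/class/net}"

show_drops() {
    for dev in "$SYSFS_NET"/*; do
        # Skip entries that do not expose statistics
        [ -r "$dev/statistics/rx_dropped" ] || continue
        name=$(basename "$dev")
        rx=$(cat "$dev/statistics/rx_dropped")
        tx=$(cat "$dev/statistics/tx_dropped")
        printf '%s rx_dropped=%s tx_dropped=%s\n' "$name" "$rx" "$tx"
    done
}

show_drops
```

Running it twice a few minutes apart under load and comparing the numbers for the bridge and the VM tap interfaces shows whether drops are still climbing.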

So what is a good solution to this problem?

This is where we can look at a third-party utility that provides a better bridge platform, one that not only performs better under higher load but also offers additional options for more complicated networking. One of the most popular high performance Linux bridge alternatives is OpenVSwitch.

OpenVSwitch provides an alternative to the standard built-in Linux bridge and can be used with many different operating systems and virtualization platforms. In our experience with Proxmox, using OpenVSwitch instead of Linux bridge noticeably increased the performance of our VMs while also improving stability.

If you want to give this a try, you will need to install and configure it. We’ll talk about how to get OpenVSwitch installed with Proxmox.

While OpenVSwitch is more difficult to set up than Linux bridge, it is still pretty easy. First, install it from the default Proxmox repository via the command line:

    apt install openvswitch-switch

It will install all the required packages, and that is it for the installation. The configuration is a little more involved. Since a physical interface can’t belong to a Linux bridge and an OpenVSwitch bridge at the same time, swapping to this configuration will require any running VMs to be offline. Because of this, it is more convenient to set up OpenVSwitch when you first set up a server, before you have active virtual machines running.
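Before touching the network configuration, it may be worth confirming that the tools actually landed on the system. This is a minimal sketch; `check_ovs` is a helper name invented for the example, and it only tests that `ovs-vsctl` is on the PATH.

```shell
# Report whether the OpenVSwitch command-line tools are available.
# check_ovs is a helper name invented for this sketch.
check_ovs() {
    if command -v ovs-vsctl >/dev/null 2>&1; then
        # Print the installed version
        ovs-vsctl --version
    else
        echo "ovs-vsctl not found; openvswitch-switch may not be installed"
        return 1
    fi
}

check_ovs || echo "fix the installation before editing the network config"
```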

A simple bridge setup with OpenVSwitch involves three interfaces: the physical interface, the bridge interface, and the default VLAN interface. We start with the configuration for the physical interface.

First, back up your network interfaces file at “/etc/network/interfaces”. Then add the following stanza, or replace the existing one for your physical interface with it, to create an OVSPort. We will be creating the bridge “vmbr0” in the next section, but it is already referenced here. Also note the VLAN options. This configuration assumes you have untagged traffic coming into the host node. Since OpenVSwitch wants VLAN-aware traffic, we assign all untagged traffic to VLAN 1 as the default.

    auto ens6
    allow-vmbr0 ens6
    iface ens6 inet manual
        ovs_bridge vmbr0
        ovs_type OVSPort
        ovs_options tag=1 vlan_mode=native-untagged

Next, you will need to define the actual bridge for your VMs to use. This part is very similar to Linux bridge, but it uses OpenVSwitch instead. We simply set up a bridge that lists the OVS ports attached to it.

    allow-ovs vmbr0
    iface vmbr0 inet manual
        ovs_type OVSBridge
        ovs_ports ens6 vlan1

Finally, the last interface is the VLAN interface. As mentioned earlier, OpenVSwitch wants to use a VLAN-aware layout. The untagged traffic above is assigned to VLAN 1, and here we define the host node's own interface on the bridge in that VLAN.

    allow-vmbr0 vlan1
    iface vlan1 inet static
        ovs_type OVSIntPort
        ovs_bridge vmbr0
        ovs_options tag=1
        address ###.###.###.###
        netmask ###.###.###.###
        gateway ###.###.###.###

Once all 3 sections here are set up in your network interfaces file according to your network requirements, you should only have to reboot your server to activate the changes.

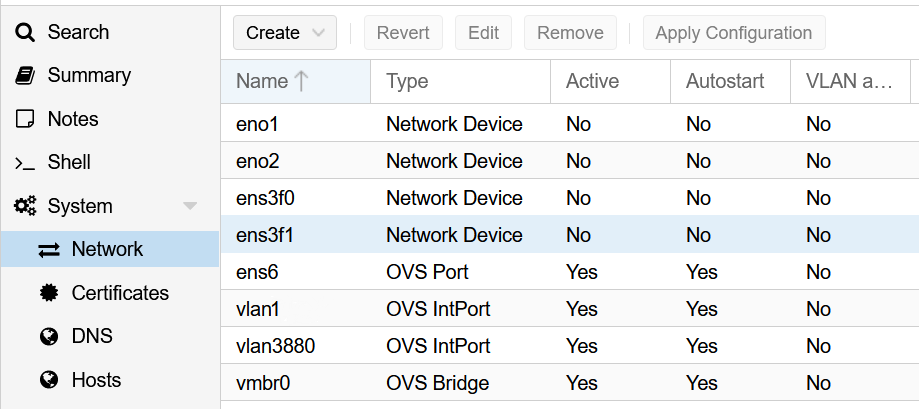

Now that we have the new OpenVSwitch bridge set up, you should be able to see it in your Proxmox control panel with the appropriate OpenVSwitch (OVS) interfaces:

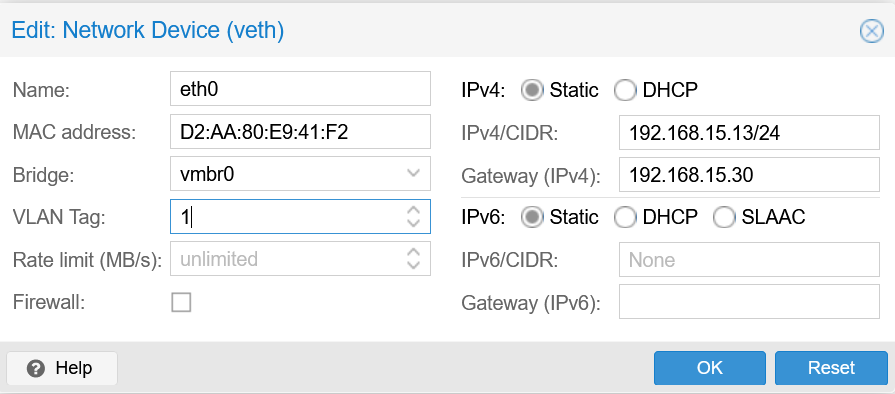
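The same check can be done from the command line. The sketch below assumes the bridge is named vmbr0 as in the configuration above; `verify_bridge` is a helper name invented for the example. If the configuration was applied as written, the port list should include ens6 and vlan1.

```shell
# verify_bridge is a helper name invented for this sketch.
# It prints the ports attached to the given OVS bridge (default: vmbr0).
verify_bridge() {
    bridge="${1:-vmbr0}"
    if ! command -v ovs-vsctl >/dev/null 2>&1; then
        echo "ovs-vsctl not found"
        return 1
    fi
    # br-exists exits non-zero when the bridge is not defined
    if ! ovs-vsctl --timeout=5 br-exists "$bridge"; then
        echo "bridge $bridge does not exist"
        return 1
    fi
    echo "ports on $bridge:"
    ovs-vsctl --timeout=5 list-ports "$bridge"
}

verify_bridge vmbr0 || echo "bridge check failed"
```

For a fuller picture, `ovs-vsctl show` prints every bridge together with its ports and VLAN tags.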

There is one more important thing to remember when creating your virtual machines. Since traffic needs to be VLAN-aware for the new bridge to function correctly, every VM must be attached to that VLAN in the advanced configuration of its network device. Simply set the VLAN tag to 1 on each VM as you create it. That’s all there is to it.
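The tag can also be set from the host's shell with Proxmox's `qm` tool. This is a sketch under assumptions: the VM ID 100 and the virtio NIC model are placeholders, and `net_opts` is a helper name invented for the example. It is meant for a freshly created VM, since setting net0 this way replaces that device's whole definition.

```shell
# Build the value for a VM network device on an OVS bridge with a VLAN tag.
# net_opts is a helper name invented for this sketch; virtio is an assumed NIC model.
net_opts() {
    printf 'virtio,bridge=%s,tag=%s' "$1" "$2"
}

VMID="${VMID:-100}"   # hypothetical VM ID, replace with your own

if command -v qm >/dev/null 2>&1; then
    # Attach the VM's first NIC to vmbr0 with VLAN tag 1
    qm set "$VMID" --net0 "$(net_opts vmbr0 1)"
else
    echo "would run: qm set $VMID --net0 $(net_opts vmbr0 1)"
fi
```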

This is a very simple configuration and implementation of OpenVSwitch and it is certainly capable of a lot more than just a bridge. To see more available options, such as Bonding or RSTP options, you can refer to the documentation here:

For readers interested in the exact performance characteristics of OpenVSwitch vs Linux bridge, this PDF report from the Department of Electrical, Electronic and Information Engineering at the University of Bologna, Italy, has a wealth of information on the subject:

Performance of Network Virtualization in Cloud Computing Infrastructures: The OpenStack Case.

In this article we talked about a common problem with virtual machine network performance. Often the cause is the default Linux bridge software, and switching to OpenVSwitch can address it. We covered how to install and configure OpenVSwitch on Proxmox. The process for other virtualization platforms will differ somewhat but may be quite similar. As this is something we’ve done for quite a few of our customers, we wanted to share this information with the broader community.

Do you love servers?

We hope this article has helped you learn about improving virtualization network performance. See some of our other articles if you want to know more about similar topics regarding performance optimization, RAID, or server configuration and administration. If you love servers like we do, we’d love to work together! IOFLOOD.com offers dedicated servers to people like you, and as part of that service, we optimize and test the network card driver when installing your OS to maximize performance. To get started today, view our dedicated servers, or email us at sales[at]ioflood.com to ask for a custom quote.