IOFLOOD.com — Phoenix, AZ — Outdoor Dedicated Server Cooling Torture Test — Part 1

Hey everyone. A lot has gone on this past summer, and it led me to an important question: Can a server running at 100% usage survive outdoors in the Phoenix sun? We seek to answer that question in a project of mad-scientist proportions. Join us for the IOFLOOD.com Phoenix, AZ Outdoor Dedicated Server Cooling Torture Test.

First off, why outdoor servers? And of all places, Phoenix, AZ!? I'll answer that question here, in part 1 of this multi-part series on dedicated server cooling.

It started this summer with two big developments.

First, I moved into a newly built apartment here in downtown Phoenix, AZ. The central A/C worked off and on the whole summer, and maintenance attempted repairs about a dozen times before finally fixing it for good. Never one to accept the status quo, I treated every A/C failure as an opportunity to learn something. How do air conditioners work? How efficient are they? What could break, and why? Could I fix it myself, or cool the apartment by some alternate means? When it's 115°F outside and your A/C isn't working, it's not hard to see how air conditioning could become something of an obsession.

Second, there's been a huge increase in cryptocurrency interest this summer. The big change is that regular computers are back in the game, with graphics cards and CPUs once again profitable for "mining" cryptocurrencies. Because mining runs these components at 100% usage 24/7, it uses a lot of power and creates a lot of heat. Keeping power and cooling costs as low as possible is essential to making money with mining.

In my spare time (and using spare colo power), I've been experimenting with the best ways to mine Monero and Zcash: learning which CPUs, GPUs, power supplies, motherboards, etc., use the least power and generate the most revenue per dollar invested. Along the way, I've learned there is a strong intersection between servers, machine learning, and crypto mining (more on this in another post).

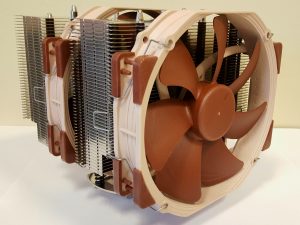

So where does that leave us now? Well, it gave me a finely tuned appreciation for server CPU and GPU cooling, both at the component level (heatsinks and fans) and at the facility level (chillers and HVAC systems). The more I looked into it, the more I began to realize: You could save a heck of a lot of electricity if you could run your servers hotter... a LOT hotter. Meanwhile, some server-level cooling solutions performed better than others, a LOT better.

That got me thinking: Standard server cooling works just fine at 25°C ambient temperatures, keeping components well below their thermal limits. Meanwhile, the best coolers can easily achieve temperatures 20°C lower than stock cooling. Presumably, then, a server built with the right components would run just fine at an ambient temperature 20°C higher.

At this point, I did a little math: 25°C + 20°C = 45°C, and 45°C = 113°F. So just maybe, a 113°F ambient temperature might be safe to run servers at, if you do it right. One last bit of math: Phoenix's 2017 record high temperature was 119°F, set on June 20th. This is within spitting distance of my "back of the envelope" possibly-acceptable maximum operating temperature.
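For anyone who wants to double-check the back-of-the-envelope numbers, here's a minimal Python sketch of the same arithmetic. The 25°C baseline and 20°C of cooler headroom are just the rough figures from the paragraphs above, not measured values, and the variable names are made up for illustration.

```python
def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def f_to_c(fahrenheit: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32) * 5 / 9


# Rough assumptions from the text, not measurements.
STOCK_AMBIENT_C = 25    # ambient temperature stock server cooling handles comfortably
COOLER_HEADROOM_C = 20  # extra headroom a top-end cooler might provide

max_ambient_c = STOCK_AMBIENT_C + COOLER_HEADROOM_C
max_ambient_f = c_to_f(max_ambient_c)

record_high_f = 119  # Phoenix's 2017 record high, June 20th
record_high_c = f_to_c(record_high_f)

# How far the record high exceeds the estimated safe ambient temperature.
gap_c = record_high_c - max_ambient_c

print(f"Estimated max ambient: {max_ambient_c} C = {max_ambient_f:.0f} F")
print(f"2017 record high: {record_high_f} F = {record_high_c:.1f} C")
print(f"Record high exceeds estimate by: {gap_c:.1f} C")
```

Run as-is, it shows the 2017 record high sits only about 3.3°C above the estimated 45°C ceiling, which is what "within spitting distance" means in practice.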

So obviously my next thought was: Can you run a server out in the hot Phoenix sun, at full 100% CPU usage, with no air conditioning? There’s only one way to find out.

In part 2, I'll describe my planned testing process in some detail. Broadly, I'll start by testing a number of components and configurations in a normal office environment. Next, with your feedback, I'll narrow down which components to test in outdoor ambient air. Finally, we'll track the operation and reliability of the hardware from now until the end of the brutal Phoenix summer. May the last server standing win. We do love servers, but on this occasion it's a 50-shades kind of love.

Want to see us test something specific? Let us know! And stay tuned for Part 2.