Optimal RAID Stripe Size and Filesystem Readahead for RAID-10?

What RAID stripe size is ideal for an array of hard drives? What are the default settings for both RAID stripe size and filesystem readahead? Are those defaults acceptable? How do these settings impact the performance of my server?

In this post, we’ll go into detail as to what settings are ideal for a server depending upon what type of files it hosts and what type of drives it uses. First we’ll get you the basic answer as to what the best settings are, and then we’ll go into detail as to why that is the case.

In modern operating systems, the default RAID stripe size is typically between 128KB and 256KB, and the same is true of the default stripe size on hardware RAID cards. The filesystem readahead value also defaults to somewhere between 128KB and 256KB in many modern operating systems.

Table of Contents

- When are the defaults OK?

- When should the defaults be changed?

- What are the optimal settings?

- Why does stripe size impact performance?

- How does an optimized RAID stripe improve performance?

- What’s the catch?

- Can we increase this to huge levels to get even more performance?

- But why not increase the readahead and stripe to absurd levels anyway?

- Do you love servers?

When are the defaults OK?

For servers that primarily host a lot of small files, or host their data on SSDs, the default settings are probably fine for both readahead and RAID stripe size. With SSDs, the defaults are OK because SSDs are very good at reading small pieces of data located anywhere on the drive, so stripe size and readahead have little or no effect on performance. For hard drives in typical use cases, the defaults are also OK because many files are smaller than the default stripe size. This means that most files you read are stored on a single drive, so reading a file only accesses a single drive. Increasing the stripe size in this situation does not change the drive access pattern much, and therefore doesn’t impact performance much.

So, in many situations, you don’t need to worry about these settings and the defaults will be fine. However, in one use case, where many users are simultaneously accessing files larger than 256KB and those files are stored on regular hard drives, the RAID stripe and filesystem readahead settings are critical to drive performance.

When should the defaults be changed?

This type of access pattern is very common for “tube sites” and file download sites. Massive file sizes lend themselves well to less expensive hard drive storage, and large-file access on hard drives is exactly where you can benefit from changing the default settings. In this use case, the default settings massively under-perform compared to an optimized configuration.

For file hosting sites or other use cases where there are large numbers of users simultaneously accessing large files, and these files are stored on hard drives, a large stripe size will give you a huge performance increase compared to a small stripe size.

What are the optimal settings?

For RAID 10 or RAID 0 on regular hard drives, a stripe size of 2MB, if available, is best. If you can’t select a stripe size as large as 2MB, pick the largest value you’re allowed. For hardware RAID cards, the maximum stripe size is often 1MB, so this would be the best option in those situations. The default of 256KB is not efficient for reading large files from regular hard drives. For SSDs, any value of 128KB or higher should perform fine.

For RAID 5, RAID 50, RAID 6, or RAID 60, a stripe size between 256KB and 512KB would be ideal for tube sites and large file download sites hosted on hard drives, while a stripe size between 128KB and 256KB would be better when most accesses are to small files, or when the data is stored on SSDs. Why these “parity” RAID levels are better off with a smaller stripe size is beyond the scope of this article. Suffice it to say that if you care about performance, RAID 10 is far better than RAID 5 / 50 / 6 / 60.

For filesystem readahead, the optimal value is typically 512KB, so long as your RAID stripe is at least 1MB. This will provide the maximum performance for a server that primarily reads large files from hard drives. It is easy to change the readahead setting on a running OS, so you can experiment with different values on a live server.

However, it is very difficult or impossible to change the RAID stripe size after an OS has been installed. For this reason, as a dedicated server hosting provider, IOFLOOD sets up an optimal RAID stripe size for customers when their OS gets installed. Customer servers are set with a 1MB RAID stripe when using hardware RAID 10 (the maximum limit for hardware RAID) with traditional hard drives. We also use a 1MB or larger stripe size for software RAID arrays of 12 or more drives, because that configuration will probably be used to store very large files, where the larger stripe has a big impact on performance. Getting this optimized properly when the OS is installed can avoid lots of disk performance pain down the road.
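Coming back to readahead, which unlike the stripe size can be adjusted on a live system: here is a minimal Python sketch, assuming a Linux server where the per-device setting is exposed at `/sys/block/<device>/queue/read_ahead_kb`. The device name `md0` is only a placeholder for your own array, the helper names are ours, and writing the value requires root. Tools such as `blockdev --setra` express the same setting in 512-byte sectors, so a small conversion helper is included.

```python
from pathlib import Path

SECTOR_BYTES = 512  # blockdev-style tools count readahead in 512-byte sectors


def read_ahead_path(device: str) -> Path:
    """Return the sysfs file that holds a block device's readahead, in KB."""
    return Path("/sys/block") / device / "queue" / "read_ahead_kb"


def kb_to_sectors(kb: int) -> int:
    """Convert a readahead size in KB to 512-byte sectors."""
    return kb * 1024 // SECTOR_BYTES


def get_read_ahead_kb(device: str) -> int:
    """Read the current readahead of a device (Linux only)."""
    return int(read_ahead_path(device).read_text().strip())


def set_read_ahead_kb(device: str, kb: int) -> None:
    """Set the readahead of a device (Linux only, needs root)."""
    read_ahead_path(device).write_text(f"{kb}\n")


if __name__ == "__main__":
    device = "md0"  # hypothetical device name; substitute your own array
    print(read_ahead_path(device))
    print(f"512KB readahead = {kb_to_sectors(512)} sectors")
```

A value written this way takes effect immediately but does not survive a reboot, which makes it convenient for experimenting before you commit to a setting.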

Why does stripe size impact performance?

To understand this, it helps to visualize what is actually happening in a hard drive.

A hard drive has a read-write head attached to an arm, and a spinning disk that contains the actual data. To read a file, the arm has to move to the spot on the disk where the data is located, and the data is read from the disk as it spins past the read-write head.

The main limitation on the speed of these hard drives is how quickly the read-write head can move from one spot on the disk to another. Generally, this can occur about 100 times per second, which lets you read files located at up to 100 different drive locations per second.

Therefore, to maximize performance, you want to read as much data as possible each time the read-write head moves to a different part of the disk. Operating systems know this, so, whenever data is requested to be read from disk, the OS goes ahead and reads more data than it was asked to, on the assumption the data might be needed eventually. This feature is called “readahead”. In many operating systems, this readahead value defaults to 256KB.

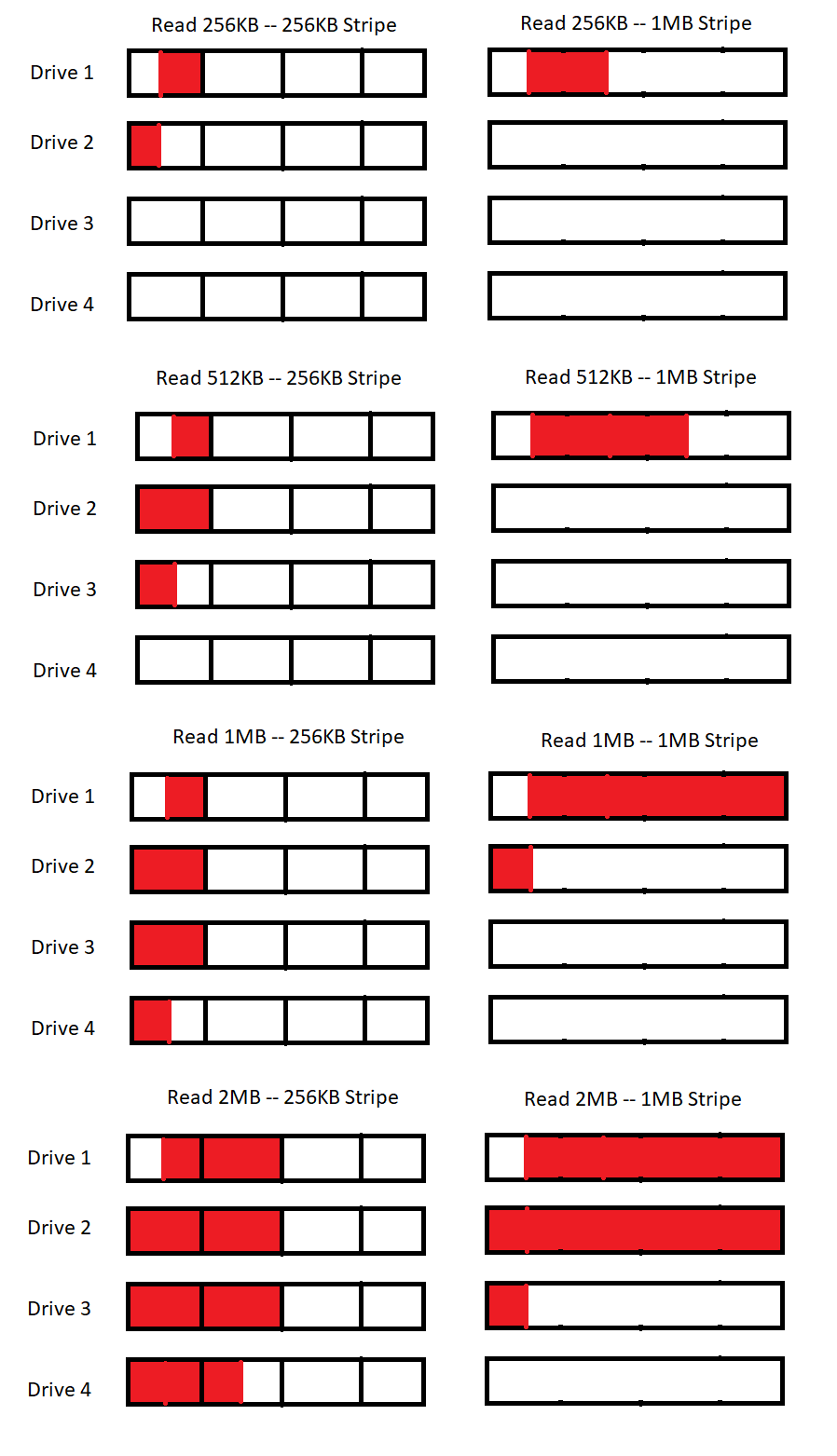

This readahead is a pretty smart idea for hard drives, but it can cause performance problems with RAID arrays, specifically those with a small stripe size of less than 1MB. The diagram shows the read pattern of an 8-drive RAID 10 array with different stripe sizes and different amounts of data being read. (Note that only the first 4 drives are shown; in a RAID 10 array, the other 4 drives are a copy of the first 4.)

Above you can see which parts of which drives are accessed when performing a read operation of different sizes. Because the start of a file can be anywhere, we indicate the most typical access pattern by assuming the read request begins somewhere in the middle of a stripe.

The first example, a 256KB read, is very typical because in many operating systems the default readahead value is 256KB. This means that, even if your application only asks to read 1KB of data from a file, the following 256KB of data from the same file will also be read from disk. If the file is smaller than the 256KB readahead, then the entire file will be read at once. This is why the readahead and stripe values are only important when your typical file size is bigger than the default readahead of 256KB.

Unfortunately, a 256KB stripe is also a common default for RAID arrays. So the top-left diagram shows what happens nearly always with default settings: 256KB of data is read, and 99% of the time this data comes from 2 different drives out of the 4 shown. In this default scenario, 128KB is read from each of two drives, on average, nearly every time. (The read would only come from a single drive in the rare case where a read request begins at the exact start of a 256KB RAID stripe.)

When changing the stripe size to 1MB, while leaving the readahead at the default of 256KB, you will read the 256KB from just one drive 75% of the time, and split the read between two drives 25% of the time. The 25% happens when your 256KB read request begins in the fourth quarter of the stripe. So in most cases, 256KB is read from just one drive, and 25% of the time, an average of 128KB is read from each of two drives.

Keep in mind from before that each drive can only “seek” to a different part of the disk around 100 times per second. Meanwhile, sequential reads can go about 100MB/s on our example drive (these numbers can be doubled to 200 seeks / sec and 200MB/s on newer drives, but the ratios stay about the same).

So what happens when the default situation causes 128KB reads on each drive, on average, per seek? Well, 128KB read per seek x 100 seeks / second = 12.8MB/s is the maximum performance you’ll get. On a drive that can read 100MB/s, this is only about 13% of the speed the drive could reach if it were only doing sequential reads. This is really poor performance, and a big focus of optimizing a RAID array here is to increase this figure.

How does an optimized RAID stripe improve performance?

To improve performance, you want to do your best to increase the amount of data read for each “seek”. This is where the RAID stripe and readahead play a critical role.

First, let’s take a look at the 1MB stripe size. In the first example, still with a 256KB read size, 75% of requests will only “hit” one drive, and each of those seeks reads the full 256KB. The other 25% of the time, the read takes two seeks of 128KB each on average. That works out to an average of 1.25 seeks per 256KB read, or about 205KB of data per seek. Assuming 100 seeks / second, this allows for performance of about 20.5MB/s per drive, a 60% performance improvement compared to the default settings!

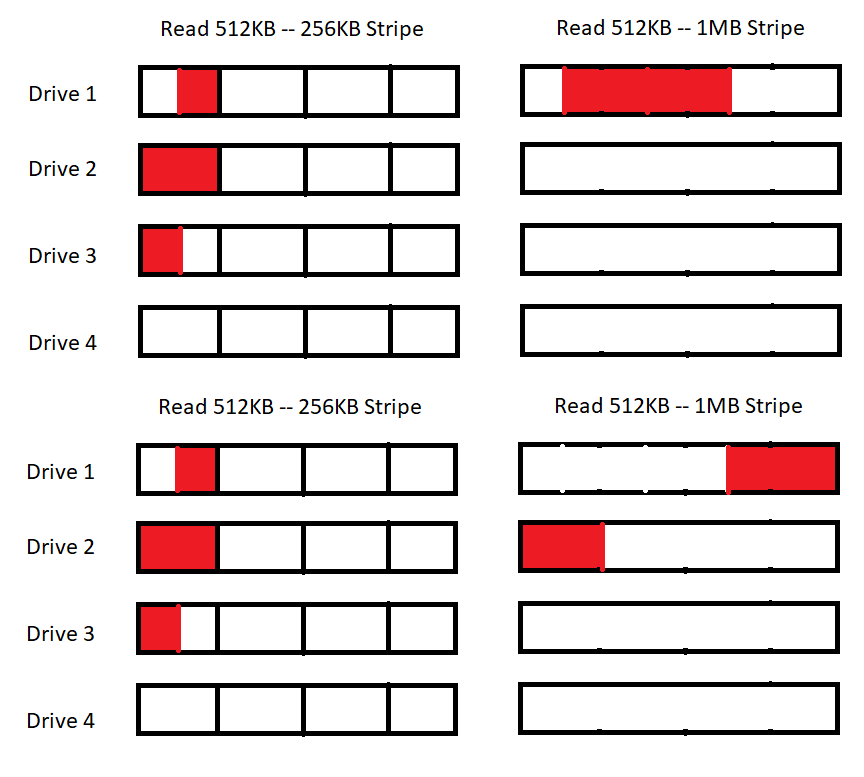

The next way we can increase performance is to increase the readahead on the array. Our second example shows the result of a 512KB readahead value, with either a 256KB or 1MB stripe.

In the image above, you can see what happens for a read request depending upon where within a stripe the read occurs. For the 256KB stripe, in 99% of cases, a 512KB read will “hit” 3 different disks. (If the read is perfectly aligned to the start of a stripe, it will read from 2 drives in order to complete the request, but this is rare.) For a 1MB stripe, a 512KB read will only read from one drive 50% of the time, while the other 50% of the time it will read from 2 drives to complete the request. Which of these occurs depends on how far into the stripe the read request begins, as depicted above.

How does this impact performance? The total data read is 512KB, and, for a 256KB stripe, this requires 3 seeks (you read from 3 different drives). So on average, a single seek results in 171KB of data read. As a single drive can do 100 seeks / second, this equals 17.1MB/s per drive. Compared to the 12.8MB/s you saw on a 256KB stripe with 256KB reads, this is a 33% improvement. Pretty good!

How does this 512KB read size perform for a 1MB stripe? Well, 50% of the time there is 1 seek, and 50% of the time there are 2 seeks, for an average of 1.5 seeks per read. Reading 512KB, you average 341KB per seek. As a single drive can do 100 seeks / second, this comes out to 34.1MB/s. Compared to the defaults of 256KB readahead and 256KB stripe size, this is about 2.7 times as fast! It also gives you 1/3 of the maximum theoretical performance of the drive, a huge increase from the default case.
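The seek arithmetic in this section can be sketched in a few lines of Python. This is only a model under the assumptions used above: a read starts at a uniformly random offset within a stripe, each drive touched costs one seek, there are 4 data drives, and the drive manages 100 seeks per second. The function names are ours, not part of any tool.

```python
SEEKS_PER_SECOND = 100  # the example drive used throughout this article


def expected_drives_touched(read_kb: int, stripe_kb: int, data_drives: int = 4) -> float:
    """Average number of drives (and so seeks) one read needs.

    Assumes the read starts at a uniformly random offset inside a stripe,
    and that a drive holding several pieces of the read serves them in one seek.
    """
    full_stripes, remainder_kb = divmod(read_kb, stripe_kb)
    # Probability that the read crosses one extra stripe boundary.
    p_extra = remainder_kb / stripe_kb
    fewer = min(full_stripes + 1, data_drives)
    more = min(full_stripes + 2, data_drives)
    return (1 - p_extra) * fewer + p_extra * more


def seek_limited_mb_per_s(read_kb: int, stripe_kb: int, data_drives: int = 4) -> float:
    """Per-drive throughput if seeks are the only cost (reads take zero time)."""
    kb_per_seek = read_kb / expected_drives_touched(read_kb, stripe_kb, data_drives)
    return kb_per_seek * SEEKS_PER_SECOND / 1000


if __name__ == "__main__":
    for read_kb, stripe_kb in [(256, 256), (512, 256), (512, 1024)]:
        seeks = expected_drives_touched(read_kb, stripe_kb)
        mb_s = seek_limited_mb_per_s(read_kb, stripe_kb)
        print(f"{read_kb}KB read, {stripe_kb}KB stripe: "
              f"{seeks:.2f} seeks, {mb_s:.1f}MB/s per drive")
```

Run as a script, this reproduces the 12.8MB/s, 17.1MB/s, and 34.1MB/s figures from the text. Like the text, it treats the rare perfectly aligned read as negligible.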

What’s the catch?

A big caveat here is that these improvements only occur if the file you are reading is at least as big as the readahead setting. The readahead will only read to the end of the file you are accessing. So, for a web site where 90% of requests are for 10KB image thumbnails, none of this will make a difference. Nearly all requests will be for 10KB images, and, with a default readahead of 256KB these 10KB images will already be loaded in their entirety every time. As well, a 256KB RAID stripe means that, almost always, the entire 10KB file will reside on a single disk, so the disadvantages of a small stripe size do not apply when reading files much smaller than the stripe size.

The situation in our diagram, and the value of these optimizations, are most relevant for file hosting or “tube” sites where the average file size is multiple megabytes, not multiple kilobytes.

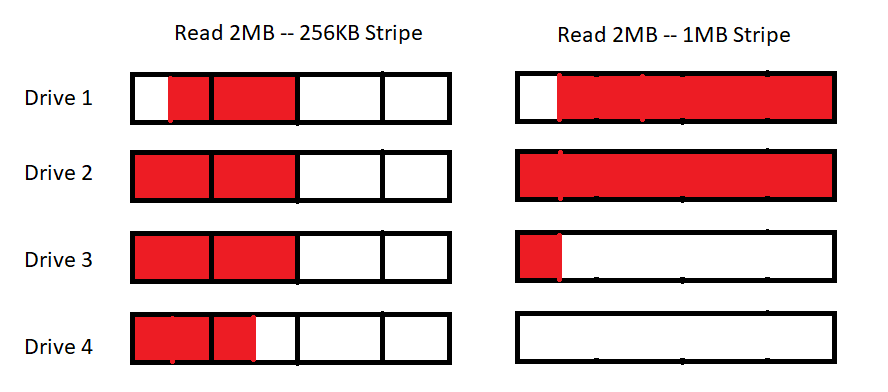

Another catch is that there are diminishing returns on the readahead compared to the maximum stripe size. For our final example, let’s look at a 2MB readahead with a 256KB or 1MB stripe size.

What is the performance here? Well, for a 256KB stripe, you read 2MB split across all 4 disks — an average of 512KB per seek. With 100 seeks per second, even the 256KB stripe array can read 51.2MB / s from each disk — much more than with the default 256KB readahead. In our example drive, this is 51% of the maximum performance the drives are capable of, a huge improvement over a 256KB readahead.

The array with a 1MB stripe does even better: in nearly all cases, a 2MB read will require participation from 3 out of 4 drives. 2MB per read at 3 seeks per read comes to 682KB per seek, or 68.2MB/s for a drive that can do 100 seeks / second. This is getting pretty close to the 100MB/s maximum speed of our drive.

Can we increase this to huge levels to get even more performance?

Although these values seem even better than before, you can see the rate of improvement is slowing dramatically. For the defaults, a 256KB readahead and 256KB stripe gave you 12.8MB/s. For a 512KB readahead and 1MB stripe, you got to 34.1MB/s. Increasing the readahead a further 4 fold only doubled theoretical performance, to 68.2MB/s, so the gains are declining fast.

There is also the problem that we have been assuming that reading the data from the drive takes zero time. When the drive is reading data at 10% of the maximum sequential speed, this oversight is a rounding error. But when you’re dealing with reading data at 40 – 60% of the drive’s maximum theoretical speed, and you assume these sequential reads take zero time, this introduces a huge error into the equation. The drive cannot spend 50% of its time reading data sequentially and then an additional 100% of its time seeking between different files on disk.

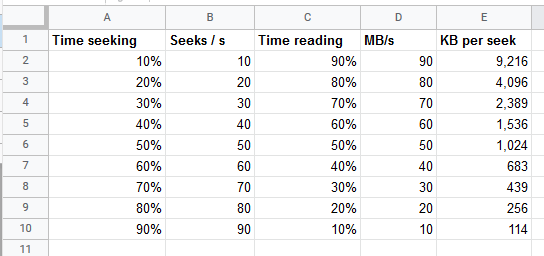

The diagram shows this tradeoff in real-world numbers:

The 128KB per seek in our default example of a 256KB stripe and 256KB readahead is very similar to the 90% time spent seeking example in the above chart. That case gives a hypothetical maximum performance of 10MB/s with a 114KB average read per seek, very close to our example figures. However, for our previous example, where a 2MB readahead on a 256KB stripe leads to a 512KB read per seek, you can see that the expected performance falls between the 30MB/s and 40MB/s levels. This is far short of the 51.2MB/s estimated when we assumed that reading data took zero time. Meanwhile, the 2MB readahead with a 1MB stripe leads to 682KB per seek, which exactly matches the 40MB/s level in the chart. This is also far short of the 68.2MB/s we estimated when assuming that sequential reads take zero time.

For this reason, about 40MB/s, or 40% of the maximum sequential read speed of the drive, is about the realistic maximum you can expect for a multi-client server use case. This is true even if you optimize the RAID stripe and readahead perfectly. Beyond this point, additional performance becomes far more difficult to achieve, requiring dramatically increased KB read per seek, which has its own negative performance consequences.
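To see where these lower figures come from, here is a rough Python sketch that charges time for both the seek and the sequential read that follows it. It assumes a 10ms seek (100 seeks / second), a 100MB/s sequential rate, and 1MB = 1024KB. This simple model is our own assumption rather than a description of how the chart was produced, although it does land on the 114KB at 10MB/s and 682KB at 40MB/s points quoted above.

```python
SEEK_TIME_S = 0.01                # 100 seeks per second
SEQUENTIAL_KB_PER_S = 100 * 1024  # 100MB/s, counting 1MB as 1024KB


def seconds_per_read(kb_per_seek: float) -> float:
    """Time for one seek plus the sequential read that follows it."""
    return SEEK_TIME_S + kb_per_seek / SEQUENTIAL_KB_PER_S


def realistic_mb_per_s(kb_per_seek: float) -> float:
    """Per-drive throughput once read time is no longer treated as free."""
    return kb_per_seek / seconds_per_read(kb_per_seek) / 1024


def fraction_time_seeking(kb_per_seek: float) -> float:
    """Share of the drive's time spent moving the head instead of reading."""
    return SEEK_TIME_S / seconds_per_read(kb_per_seek)


if __name__ == "__main__":
    for kb in (128, 512, 2048 / 3):
        print(f"{kb:.0f}KB per seek: {realistic_mb_per_s(kb):.1f}MB/s, "
              f"{fraction_time_seeking(kb):.0%} of time seeking")
```

In this model, 512KB per seek gives about 33MB/s and 2MB across 3 drives gives about 40MB/s, in line with the ranges read off the chart.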

But why not increase the readahead and stripe to absurd levels anyway?

It may seem like you can just keep increasing the readahead and always get better performance, but this is not the case. You don’t want to arbitrarily read multiple megabytes for your readahead. The biggest reason is that the data read has to sit in RAM until it’s actually needed. If a client is slowly downloading a file, it may take a long time before they need data that’s several MB ahead of what they’ve already downloaded.

This data sits in RAM as part of the Linux filesystem cache, which is used to store copies of recently read files. To store new data in the cache, something else has to be removed first. Likewise, if your server needs this RAM for some other reason, such as a different file having just been read from disk, old data will be flushed out of RAM. If your end user hasn’t downloaded that data yet, the flushed data will need to be read from the drive again! This wastes your very limited seeks / second and MB/s, forcing the server to read the same data multiple times in order to send it to a user just once.

As well, although a drive can do sequential reads much faster than it can read multiple files, it still takes longer to read a lot of data than a little bit of data, so you only want to read data you’ll actually use. Now that the drive is performing a large percentage of the sequential data transfer it is capable of, there are diminishing returns, and increasing costs, to simply growing the readahead forever.

One way to address this is to keep the amount of RAM used for readahead at a reasonable level, so that the odds are very good that the data will still be in RAM when you actually need it. Let’s say that for a server with a good amount of RAM (8GB or larger), you don’t want to devote more than 25% of the RAM to this purpose. So let’s calculate that out.

For a server with 1,000 simultaneous users downloading files or watching videos, a 1MB readahead means that 1GB of recently read data needs to be kept in RAM at all times. This is necessary to prevent the data from being discarded before it is used. For a server with 8GB of RAM, 1GB of RAM use is pretty reasonable. There is a good chance this data will not be flushed before you need it.

However, let’s say your server is very busy — it has 10,000 simultaneous connections. Meanwhile, you also are having performance issues, and you think a huge readahead may help with this, so you set it to 10MB. You are hoping that a 10MB readahead and 40MB RAID stripe size will get you to that magic level of 90MB/s reads in the chart from earlier.

However, with a 10MB readahead setting on a server with 10,000 simultaneous users, readaheads will require 100GB of RAM! Unless this server has 512GB of RAM or more, it is certain that most of the data you read from disk will be discarded before a user ever downloads it, making your performance problems dramatically worse. As well, this is RAM that could otherwise be used for larger TCP buffers, which would speed up users’ downloads. For servers with a huge number of connections, these various caches and buffers are a delicate balancing act.
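The RAM budgeting above is simple enough to sketch in Python. This assumes one readahead window held in RAM per simultaneous user, the 25% RAM share suggested earlier, and 1GB = 1000MB to match the round numbers in the text. The function names are ours.

```python
def readahead_ram_gb(simultaneous_users: int, readahead_mb: float) -> float:
    """RAM needed to hold one readahead window per user, in GB."""
    return simultaneous_users * readahead_mb / 1000


def minimum_server_ram_gb(simultaneous_users: int, readahead_mb: float,
                          max_ram_share: float = 0.25) -> float:
    """Smallest server RAM that keeps readahead within the chosen share."""
    return readahead_ram_gb(simultaneous_users, readahead_mb) / max_ram_share


def largest_readahead_mb(simultaneous_users: int, server_ram_gb: float,
                         max_ram_share: float = 0.25) -> float:
    """Largest readahead that fits within the chosen share of server RAM."""
    return server_ram_gb * 1000 * max_ram_share / simultaneous_users


if __name__ == "__main__":
    print(readahead_ram_gb(1_000, 1))        # 1,000 users at 1MB each
    print(readahead_ram_gb(10_000, 10))      # 10,000 users at 10MB each
    print(minimum_server_ram_gb(10_000, 10)) # RAM needed at a 25% share
    print(largest_readahead_mb(10_000, 8))   # what an 8GB server can afford
```

Under the 25% rule, the 10,000-user, 10MB case needs about 400GB of RAM, which is in line with the 512GB-or-more figure above. The same 8GB server that handles 1,000 users comfortably could afford only about 0.2MB of readahead per user at 10,000 connections.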

Do you love servers?

We hope this article has helped you learn about an important and often-misunderstood setting for tuning disk performance. With SSDs becoming ever more popular, there are fewer cases where these settings need to be tweaked. However, for certain websites hosting large files on hard drives, it is still important to optimize these values. For a RAID 10 array, a filesystem readahead of 512KB and a RAID stripe size of 2MB are ideal (1MB if 2MB is not available). See some of our other articles if you want to know more about similar topics regarding RAID, performance optimization, or server configuration and administration.

If you love servers like we do, we’d love to work together! IOFLOOD.com offers dedicated servers to people like you, and as part of that service, we optimize the RAID stripe when installing your OS to maximize performance. To get started today, click here to view our dedicated servers, or email us at sales[at]ioflood.com to ask for a custom quote.